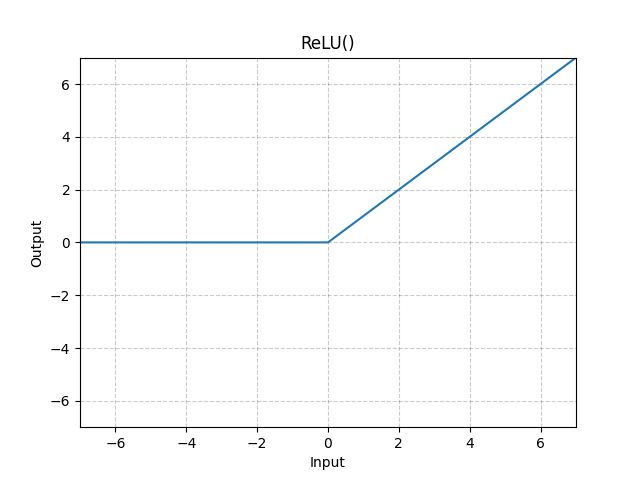

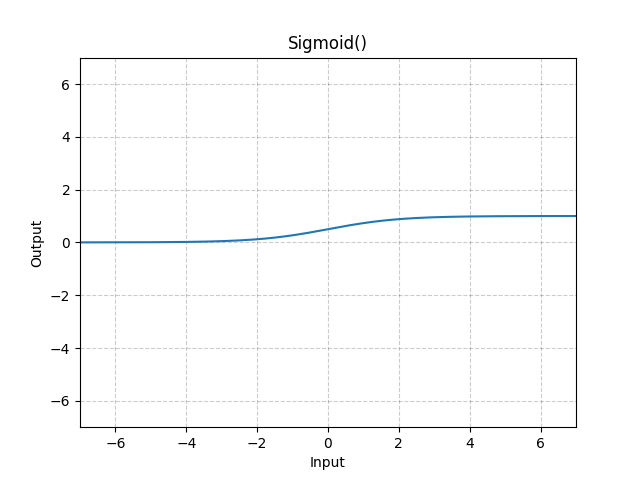

# PyTorch Study Notes 3

## Neural Networks

### Using nn.Module

A simple example: subclass `nn.Module` and override the constructor `__init__` and the `forward` method (`forward` must be overridden).

In PyCharm, parent-class methods can be overridden via Code → Override Methods.

~~~python
import torch
from torch import nn


class MyModel(nn.Module):
    # Network structure
    def __init__(self) -> None:
        super().__init__()

    # Forward pass
    def forward(self, input):
        output = input + 1
        return output


test = MyModel()
x = torch.tensor(1.0)
output = test(x)  # forward is not called explicitly; test(x) ends up calling test.forward(x)
print(output)
~~~

Output:

~~~
tensor(2.)
~~~

**Note**: the instance can be called like a function because of the class's `__call__` method. Defining `__call__` is similar to overloading the `()` operator, so an instance can be used as "**instance_name()**", just like an ordinary function. `nn.Module.__call__` is what invokes `forward`.

### Convolution layer

`nn.Conv2d` applies a 2D convolution over an input signal composed of several input planes; it involves matrix computation.

Common parameters:

- **in_channels** ( [*int*](https://docs.python.org/3/library/functions.html#int) ) – number of channels in the input image (the blue part of the figure above)
- **out_channels** ( [*int*](https://docs.python.org/3/library/functions.html#int) ) – number of channels produced by the convolution (the green part of the figure above)
- **kernel_size** ( [*int*](https://docs.python.org/3/library/functions.html#int) *or* [*tuple*](https://docs.python.org/3/library/stdtypes.html#tuple) ) – size of the convolution kernel; can be given as 3 or (3, 3) (the grey part of the figure above)
- **stride** ( [*int*](https://docs.python.org/3/library/functions.html#int) *or* [*tuple*](https://docs.python.org/3/library/stdtypes.html#tuple) *,* *optional* ) – stride of the convolution, i.e. how far the kernel moves at each step in the figure above. Default: 1
- **padding** ( [*int*](https://docs.python.org/3/library/functions.html#int) *,* [*tuple*](https://docs.python.org/3/library/stdtypes.html#tuple) *or* [*str*](https://docs.python.org/3/library/stdtypes.html#str) *,* *optional* ) – padding added to all four sides of the input. Default: 0 (the figure above uses padding 1)
- **padding_mode** ( *str, optional* ) – `'zeros'`, `'reflect'`, `'replicate'` or `'circular'`. Default: `'zeros'`

**Applying a convolution**

~~~python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=4)


class MyModel(nn.Module):
    def __init__(self):
        super(MyModel, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x


test = MyModel()
print(test)

# Take the first batch of 4 images from the dataloader
imgs, targets = next(iter(dataloader))
output = test(imgs)
print(imgs.shape)
print(output.shape)
~~~

**Output**: both the number of channels and the image size change. With `kernel_size=3`, `stride=1` and `padding=0`, each spatial dimension shrinks from 32 to 32 - 3 + 1 = 30.

~~~
MyModel(
  (conv1): Conv2d(3, 6, kernel_size=(3, 3), stride=(1, 1))
)
torch.Size([4, 3, 32, 32])
torch.Size([4, 6, 30, 30])
~~~

### Pooling layer

Max pooling takes the maximum value inside each window. Unlike a convolution kernel, the window has no weights.

`MaxPool2d` parameters:

- **kernel_size** – size of the window to take the maximum over
- **stride** – stride of the window. Default: `kernel_size`
- **padding** – implicit padding added on both sides (for max pooling the padded positions act as negative infinity, so they never become the maximum)
- **dilation** – controls the stride between elements inside the window
- **return_indices** – if `True`, returns the indices of the maxima along with the outputs
- **ceil_mode** – if `True`, uses ceil instead of floor to compute the output shape, so windows that only partly overlap the input are kept; if `False`, those partial windows are discarded

Example:

~~~python
import torch
from torch import nn
from torch.nn import MaxPool2d

# dtype must be floating point; with an integer tensor PyTorch raises
# RuntimeError: "max_pool2d" not implemented for 'Long'
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
# -1 lets reshape infer that dimension from the others
input = torch.reshape(input, (-1, 1, 5, 5))
print(input.shape)


class MyModel(nn.Module):
    def __init__(self):
        super(MyModel, self).__init__()
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):
        output = self.maxpool1(input)
        return output


test = MyModel()
output = test(input)
print(output)
~~~

`ceil_mode=True` (the partial windows on the right and bottom edges are also pooled):

~~~
torch.Size([1, 1, 5, 5])
tensor([[[[2., 3.],
          [5., 1.]]]])
~~~

`ceil_mode=False` (only the top-left 3×3 window is pooled):

~~~
torch.Size([1, 1, 5, 5])
tensor([[[[2.]]]])
~~~

### Non-linear Activations

**ReLU(x)** = max(0, x)

~~~python
import torch
from torch import nn
from torch.nn import ReLU

# ReLU is applied element-wise, so the 2x2 tensor can be passed in as-is
input = torch.tensor([[1, -0.5],
                      [-1, 3]])
print(input.shape)


class MyModel(nn.Module):
    def __init__(self):
        super(MyModel, self).__init__()
        self.relu1 = ReLU()

    def forward(self, input):
        output = self.relu1(input)
        return output


test = MyModel()
output = test(input)
print(output)
~~~

Output:

~~~
torch.Size([2, 2])
tensor([[1., 0.],
        [0., 3.]])
~~~

**Sigmoid(*x*)** = $\frac{1}{1+e^{-x}}$

~~~python
import torch
import torchvision
from torch import nn
from torch.nn import ReLU, Sigmoid
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=64)


class MyModel(nn.Module):
    def __init__(self):
        super(MyModel, self).__init__()
        self.relu1 = ReLU()  # defined but not used in forward
        self.sigmoid1 = Sigmoid()

    def forward(self, input):
        output = self.sigmoid1(input)
        return output


test = MyModel()

# Log each input batch and its sigmoid output to TensorBoard
writer = SummaryWriter("logs_relu")
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images("input", imgs, step)
    outputs = test(imgs)
    writer.add_images("output", outputs, step)
    step += 1

writer.close()
~~~

### Linear layer

~~~python
import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True,
                                       transform=torchvision.transforms.ToTensor())
# drop_last=True discards the final, smaller batch (see the error described below)
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)


class MyModel(nn.Module):
    def __init__(self):
        super(MyModel, self).__init__()
        # 196608 = 64 * 3 * 32 * 32: a whole batch flattened into one vector
        self.linear1 = Linear(196608, 10)

    def forward(self, input):
        output = self.linear1(input)
        return output


test = MyModel()

for data in dataloader:
    imgs, targets = data
    print(imgs.shape)
    output = torch.reshape(imgs, (1, 1, 1, -1))
    # Alternative: output = torch.flatten(imgs), which gives shape [196608]
    print(output.shape)
    output = test(output)
    print(output.shape)
~~~

Without `drop_last=True` the loop fails with `RuntimeError: mat1 and mat2 shapes cannot be multiplied (1x49152 and 196608x10)`. The 10000 test images do not divide evenly by 64, so the last batch holds only 16 images, which flatten to 16 × 3 × 32 × 32 = 49152 values instead of 196608. Adding `drop_last=True` to the `DataLoader` drops that batch.

Output:

~~~
Files already downloaded and verified
torch.Size([64, 3, 32, 32])      # original batch
torch.Size([1, 1, 1, 196608])    # flattened
torch.Size([1, 1, 1, 10])
# ……
~~~

`torch.flatten(imgs)` holds the same values in the same order as `torch.reshape(imgs, (1, 1, 1, -1))`, but the shapes differ: `flatten` returns a one-dimensional tensor of shape `[196608]`, while the `reshape` call returns a four-dimensional tensor of shape `[1, 1, 1, 196608]`. `Linear` acts on the last dimension, so it accepts either.

Last modified: 19 August 2022

© Reposting permitted with proper attribution
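As a follow-up to the linear-layer section, here is a minimal sketch comparing `torch.flatten` with `torch.reshape`. It uses random tensors as a stand-in for CIFAR-10 batches (an assumption, made so that nothing has to be downloaded), and it also shows one possible alternative that is not in the original notes: flattening per sample with `start_dim=1`, so that the layer's input size no longer depends on the batch size.

```python
import torch
from torch import nn

# Stand-in for one CIFAR-10 batch (random values, not real images)
imgs = torch.rand(64, 3, 32, 32)

# Flatten the whole batch: 1-D tensor versus 4-D tensor with the same values
flat_all = torch.flatten(imgs)                  # shape [196608]
reshaped = torch.reshape(imgs, (1, 1, 1, -1))   # shape [1, 1, 1, 196608]
same_values = torch.equal(flat_all, reshaped.reshape(-1))

# Alternative (not from the original notes): keep the batch dimension
per_sample = torch.flatten(imgs, start_dim=1)   # shape [64, 3072]
linear = nn.Linear(3 * 32 * 32, 10)
logits = linear(per_sample)                     # shape [64, 10]

# A smaller final batch of 16 images works with the same layer
last_batch = torch.rand(16, 3, 32, 32)
last_logits = linear(torch.flatten(last_batch, start_dim=1))  # shape [16, 10]

print(flat_all.shape, reshaped.shape, same_values)
print(per_sample.shape, logits.shape, last_logits.shape)
```

With per-sample flattening, each image gets its own row of 10 outputs, and `drop_last=True` is no longer needed to avoid the shape mismatch. Whether that is preferable depends on what the layer is meant to model; the original code treats the whole batch as a single input vector.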